Tech - ESXi to Proxmox the real migration

Let's cut the fat here: the Broadcom takeover of VMware has had a huge impact. For some businesses, keeping a VMware environment is no longer cost-effective.

This has been on the Geekden cards for a long time, well before the Broadcom acquisition.

<rant>

I just want to take the time to make this note (rant).

If you are sitting here reading this with the mindset

'This doesn't affect me'

'I don't see our business going with Proxmox'

'It's not my decision...'

If that's you, simple advice: get your head out of your AR**.

This is new learning and as an IT professional, you should always want to learn new things...

</rant>

Here are the requirements I had to meet:

Supported by the vendor.

Upgradable without downtime.

Ideally open source.

Able to deal with host failures through HA.

The smallest possible amount of downtime.

An alternative to vSAN.

Proxmox checked all the boxes.

BEFORE ANYONE SAYS 'well, what about x hypervisor?'

That's not really the point.

I needed something that was going to work, that was open-source, and looked and behaved like ESX/vCenter.

Proxmox simply checked all the boxes.

Good to know points:

At the time of writing (April 2024), Veeam Backup & Replication does not support Proxmox.

But Proxmox has its own open-source backup product, Proxmox Backup Server! No more worrying about the silly pricing of Veeam now. Back up all the things!

There isn't a vCenter, as you should know. Management is baked into each host.

Think of it like the ESXi standalone client, just more functional. Basically, the more hosts you have, the more vCenters you have.

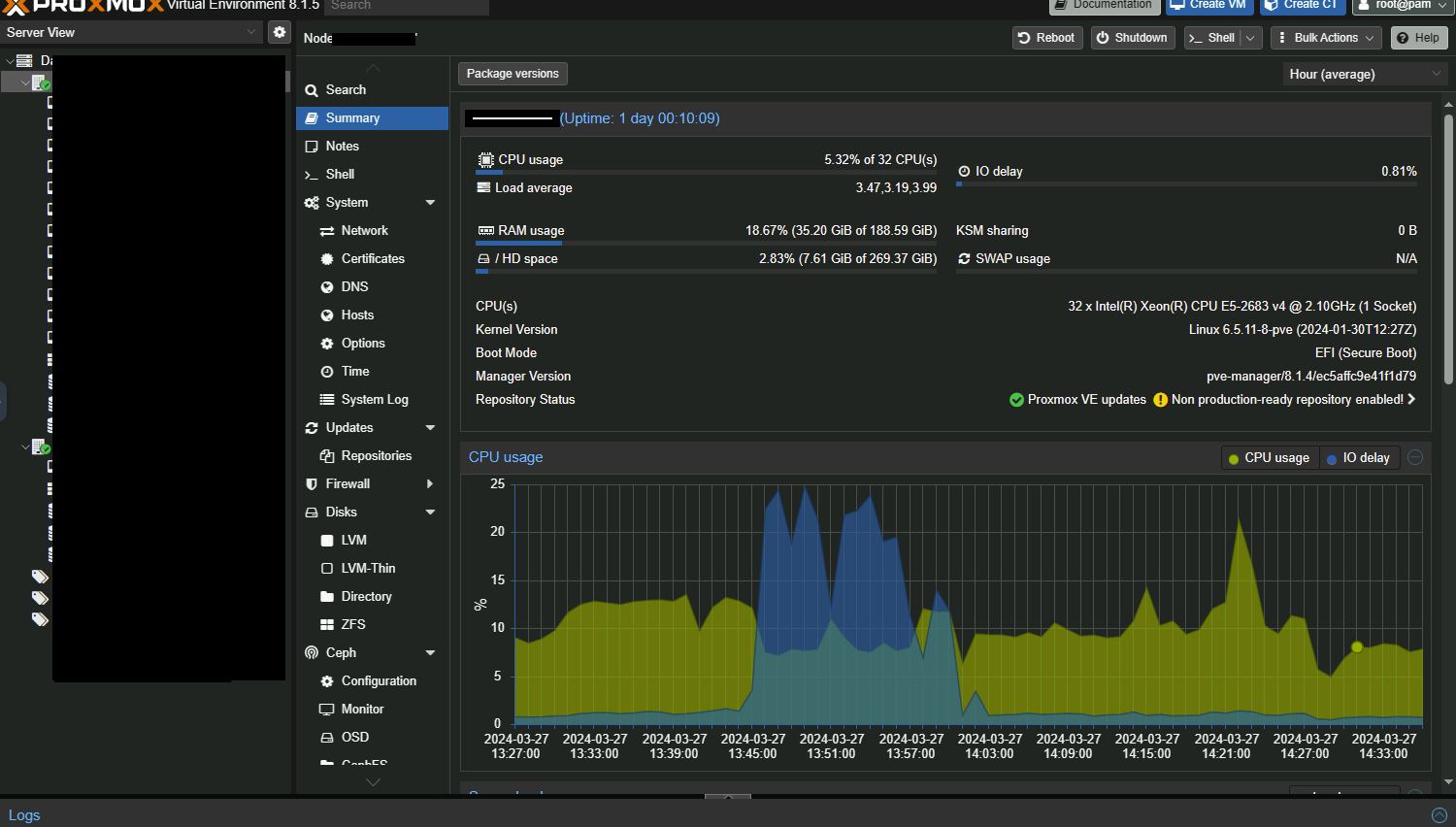

Let's talk about installing Proxmox.

Coming from a heavily ESX/vCenter-focused environment, I was worried that replacing it would mean facing many issues.

Installing Proxmox is simple, like ESX, but it needs to be installed on physical SSDs or HDDs.

No SD cards, USB drives or SAN boots. Personally, I feel that if you use one of these you are asking for trouble, but that's my 2 cents.

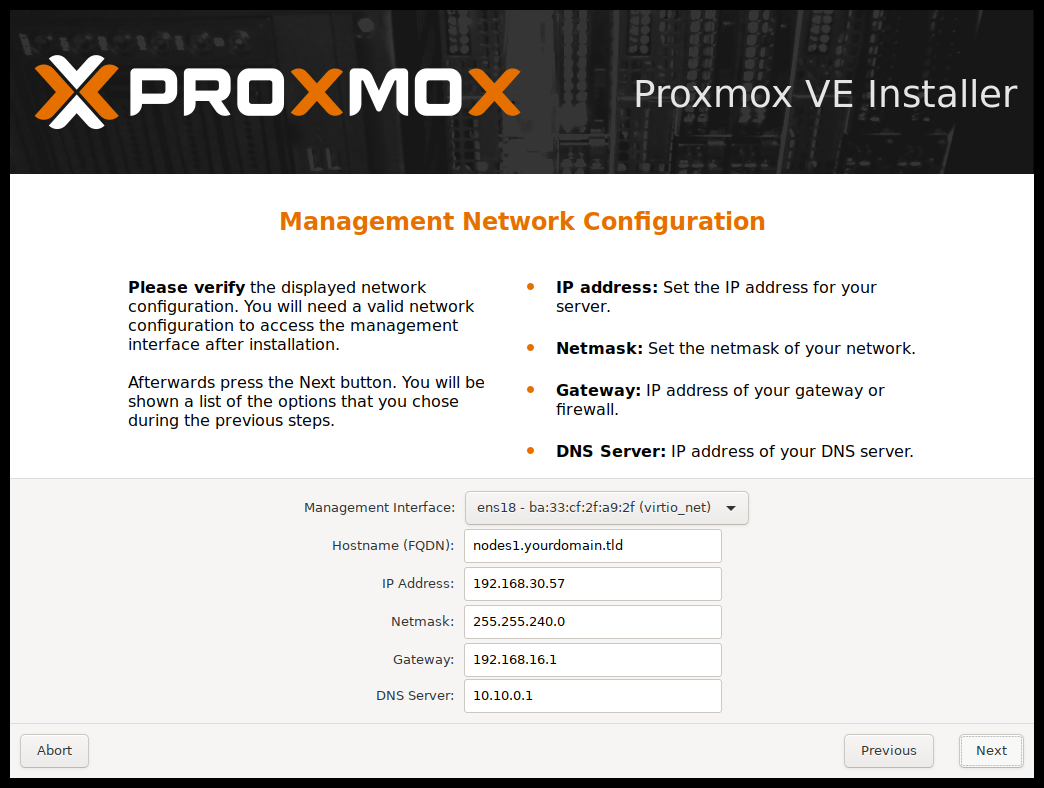

If you've installed an ESX host before, this should be really simple. Download the ISO from the Proxmox site, write it to a USB drive with Rufus or Etcher, and boot from it.
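Before writing the ISO to USB, it is worth checking the download against the SHA-256 checksum published next to it. A minimal sketch, assuming a Linux machine with `sha256sum` available; the function name `verify_iso`, the file name and the hash in the usage line are placeholders, not real values.

```shell
# verify_iso FILE EXPECTED_SHA256
# Returns 0 when the file's SHA-256 matches the expected value, non-zero otherwise.
verify_iso() {
    iso_path=$1
    expected=$2
    # sha256sum prints "<hash>  <file>"; keep only the hash column.
    actual=$(sha256sum "$iso_path" | awk '{print $1}')
    [ "$actual" = "$expected" ]
}

# Usage (placeholder file name and hash):
# verify_iso proxmox-ve.iso "<hash from the download page>" && echo "checksum OK"
```

If the check fails, download the ISO again rather than installing from it.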

Once you reach this stage, everything is easy to fill out; then reboot. You can leave the network settings on DHCP if you wish, if you are that insane.

Migrating

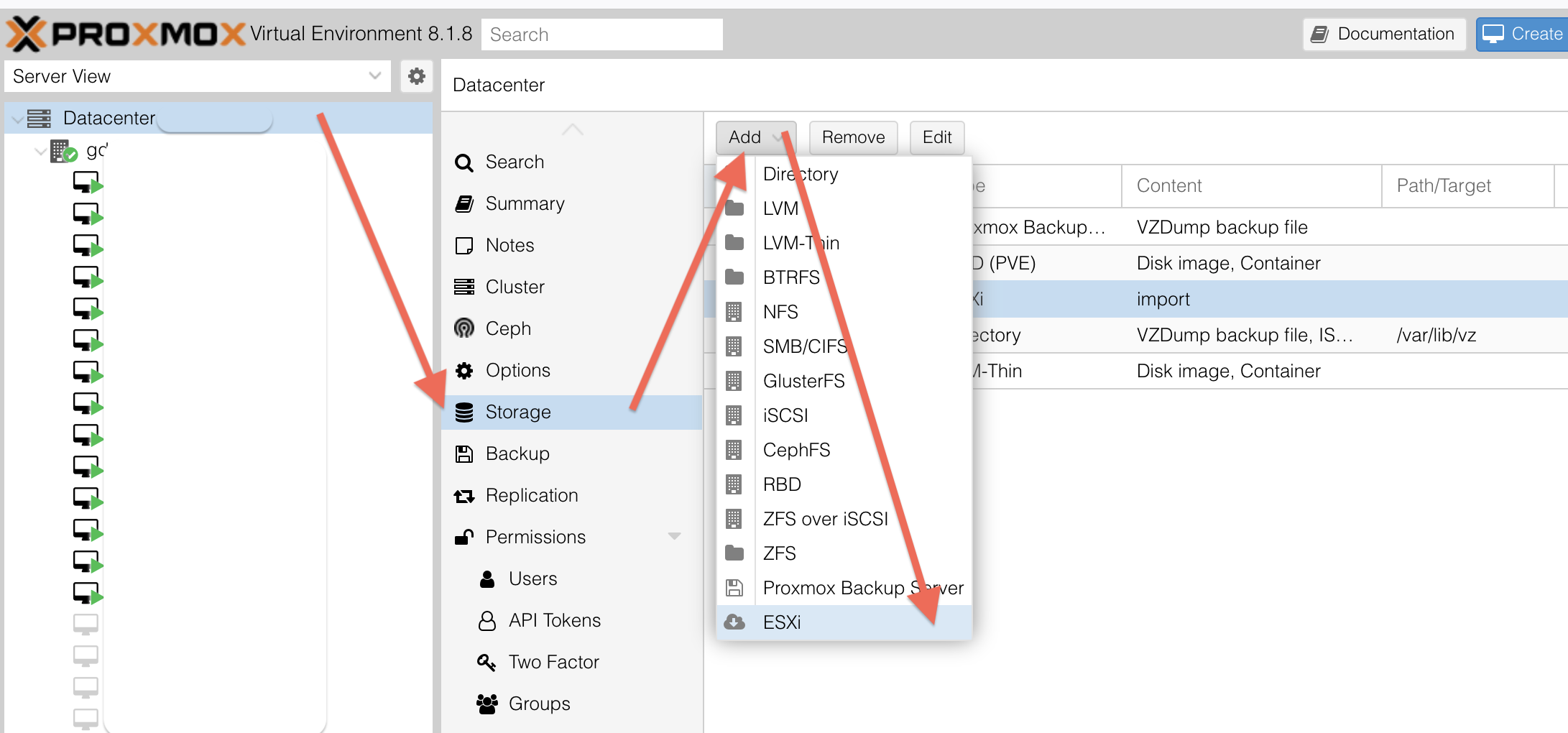

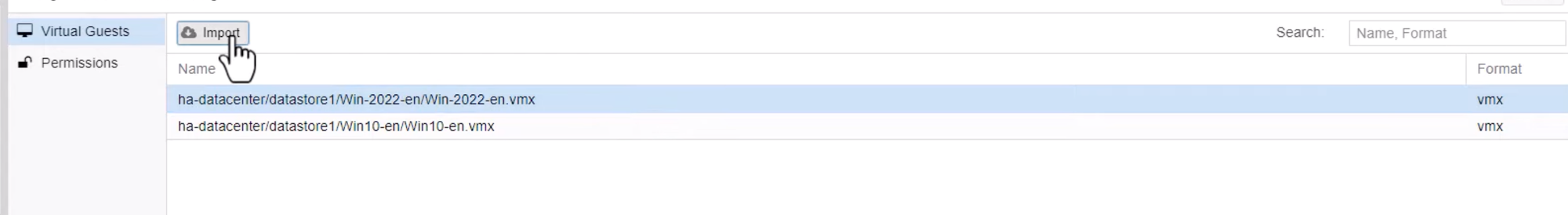

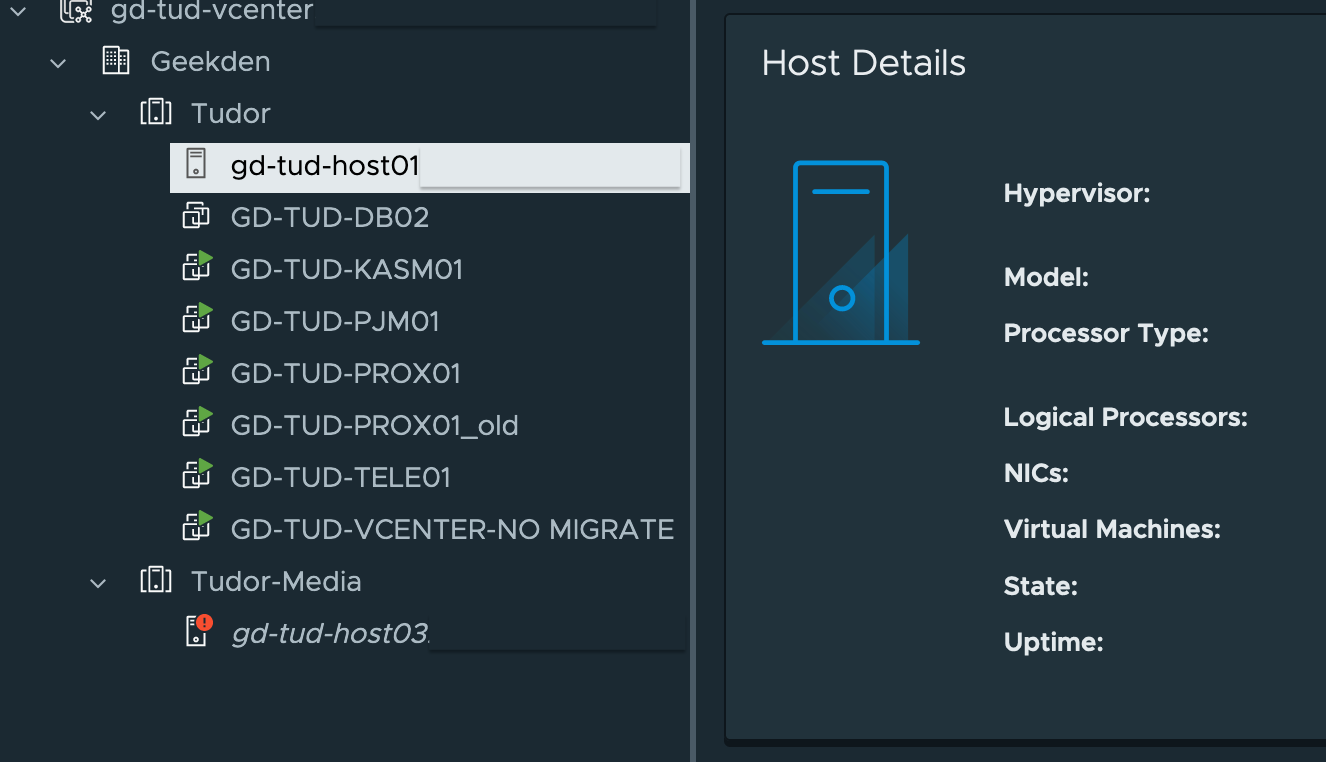

Since version 8.1.7, migrating from ESXi to Proxmox has taken a large jump forward: the whole migration can now be done from within the Proxmox UI.

Once you've added your ESX server, you can then choose which VMs to migrate away from that host.

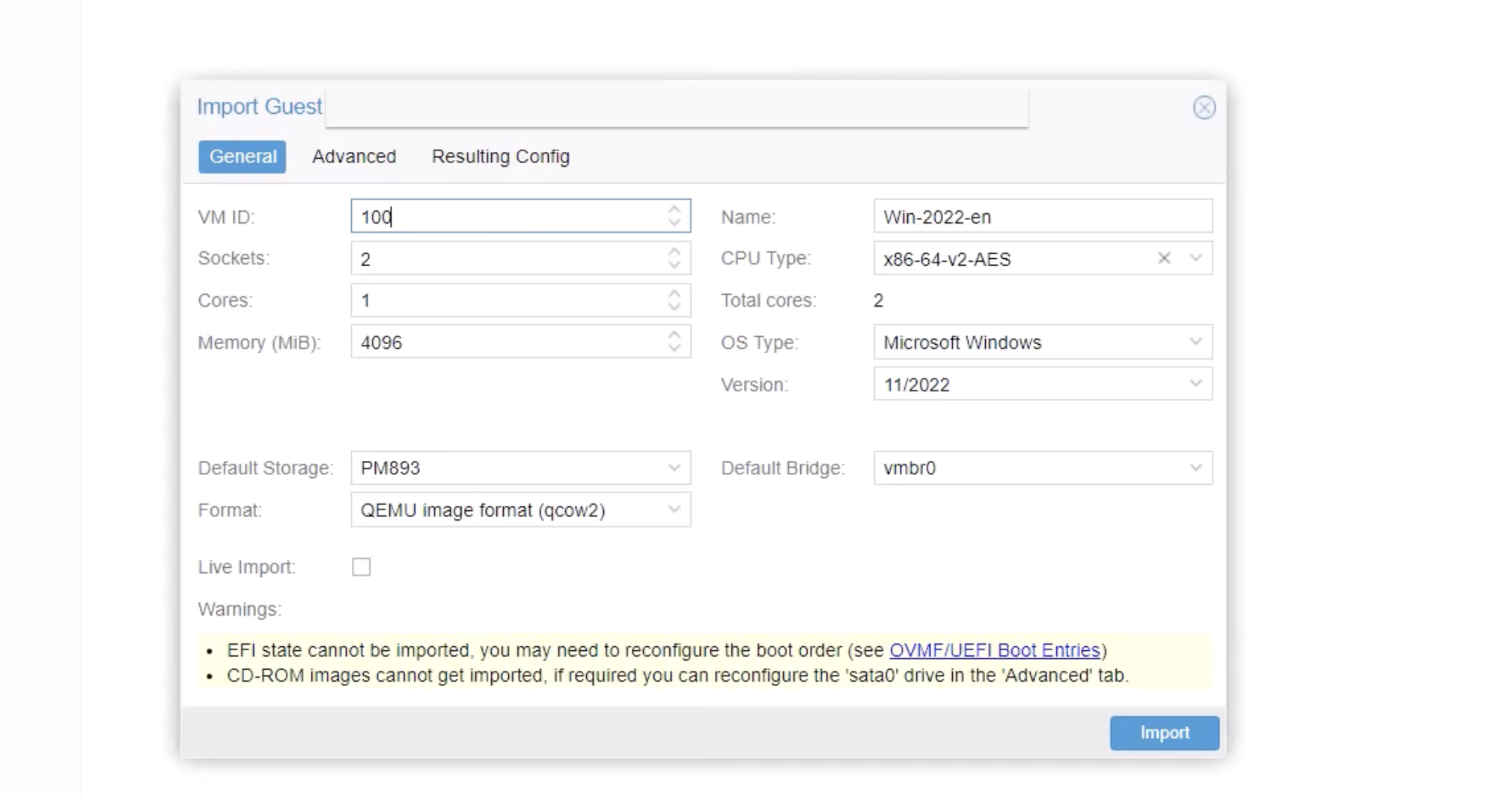

Import the VMX.

Before you choose to migrate you need to ensure that the source VM is powered off.
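If you would rather confirm the power state from the ESXi shell than from the UI, something like the following can help. A minimal sketch, assuming SSH access to the ESXi host and that `vim-cmd vmsvc/power.getstate` reports a stopped VM with a line reading `Powered off`; the helper name `is_powered_off` and the VM ID 42 are hypothetical.

```shell
# is_powered_off reads the output of `vim-cmd vmsvc/power.getstate <id>` on stdin
# and succeeds only if it contains the line "Powered off".
is_powered_off() {
    grep -q '^Powered off$'
}

# Usage on the ESXi host (42 is a made-up VM ID; `vim-cmd vmsvc/getallvms` lists the real ones):
# vim-cmd vmsvc/power.getstate 42 | is_powered_off || echo "VM 42 is still running"
```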

Migrating around 100 VMs will take around a week. Each VM will have downtime, and how much depends on its size, so that's important to keep in mind.

Our biggest VM is 2 TiB, and it took around 1 hour to migrate.
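That one data point gives a rough way to plan downtime windows for other VMs. 2 TiB in 1 hour works out to 2048 * 1024 / 3600, or roughly 582 MiB/s. The sketch below assumes throughput stays about the same for every VM, which will not hold exactly on your network and storage; the function name `estimate_minutes` and the 500 GiB VM are made up for illustration.

```shell
# estimate_minutes SIZE_GIB RATE_MIB_S
# Prints the estimated copy time in whole minutes (integer maths, rounded down).
estimate_minutes() {
    size_gib=$1
    rate_mib_s=$2
    seconds=$(( size_gib * 1024 / rate_mib_s ))
    echo $(( seconds / 60 ))
}

estimate_minutes 2048 582   # the 2 TiB VM from above: prints 60
estimate_minutes 500 582    # a hypothetical 500 GiB VM: prints 14
```

Treat the result as a lower bound and add time for the post-migration fixes described below.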

Post Migration

Linux Guests

Following the migration to Proxmox, the networking on your Linux guests will need updating.

Proxmox presents the virtual NIC differently from VMware, so the interface name inside the guest changes. For example, if you had interface ens180, you might find that under Proxmox it has changed to ens18.
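For guests with a static configuration, the fix is to swap the old interface name for the new one in the network config. A minimal sketch: which file to edit is an assumption that depends on the distro (for example `/etc/network/interfaces` on Debian, or a YAML file under `/etc/netplan/` on Ubuntu), and `rename_nic` is a hypothetical helper name. Run `ip -br link` inside the guest first to see what the interface is actually called now.

```shell
# rename_nic FILE OLD NEW
# Keeps a copy of the original as FILE.bak, then rewrites FILE with the new name.
# This is a plain text substitution: fine for ens180 -> ens18, but check the
# result if one interface name is a prefix of another.
rename_nic() {
    config=$1
    old=$2
    new=$3
    cp "$config" "$config.bak"
    sed "s/$old/$new/g" "$config.bak" > "$config"
}

# Usage inside the guest (path is an example, adjust for your distro):
# rename_nic /etc/network/interfaces ens180 ens18
```

Restart networking or reboot the guest afterwards so the change takes effect.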

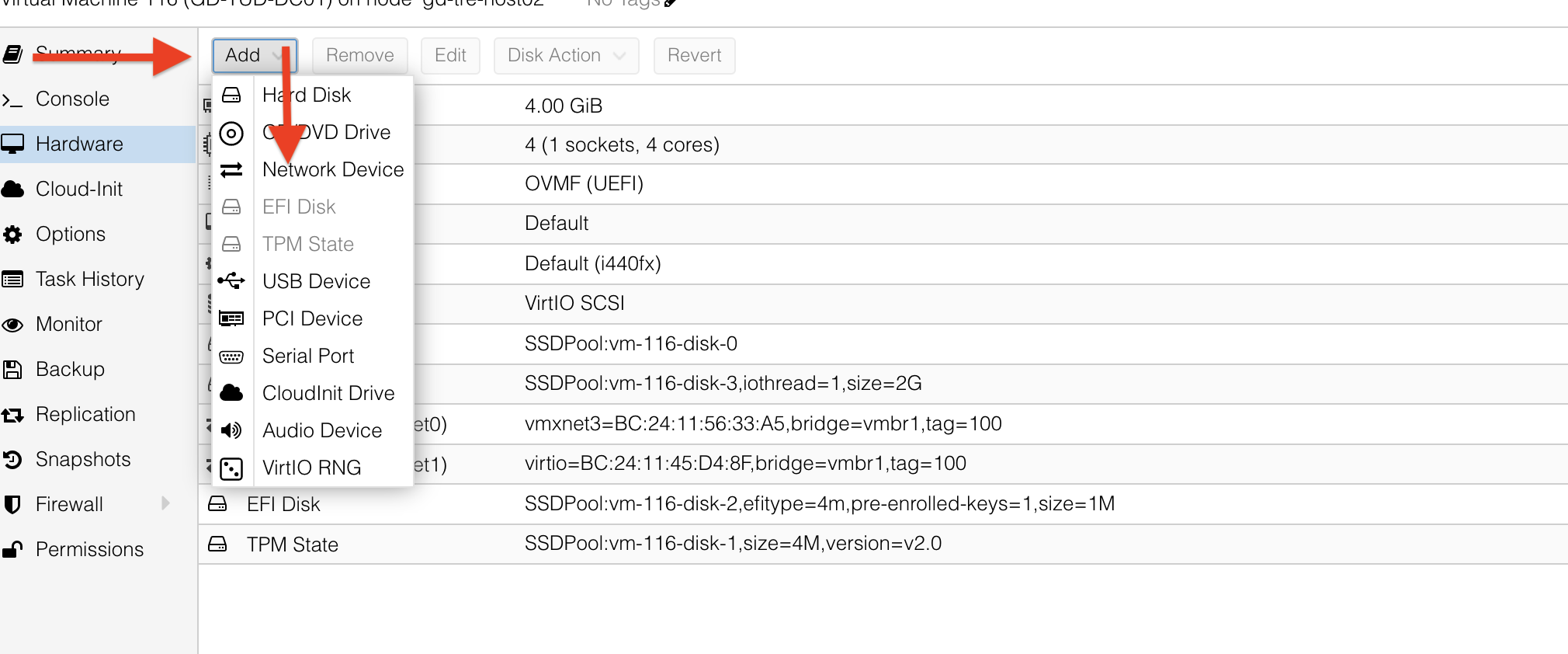

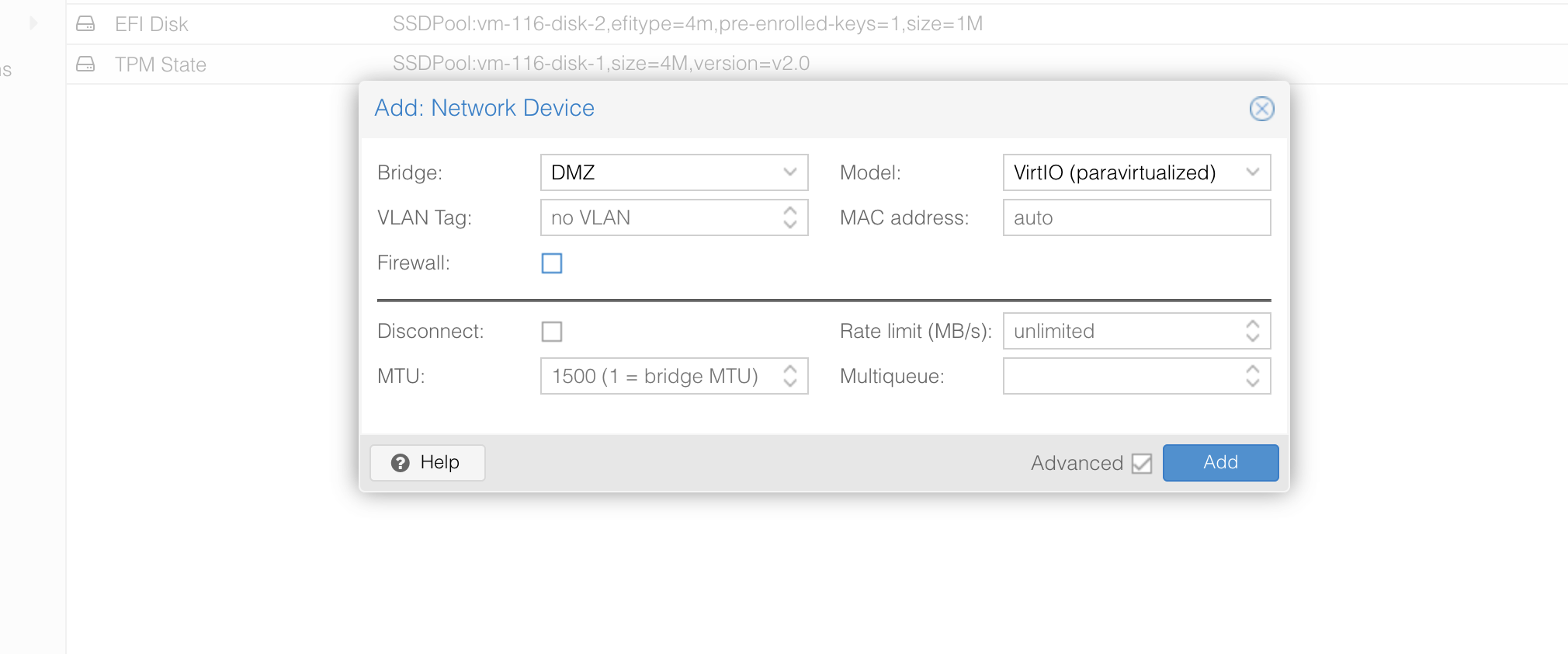

If you are using DHCP for anything it's just as simple as adding a new NIC:

I use the VirtIO NIC as I found the performance of this NIC to be the best.
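The same NIC change can be made from the Proxmox host shell with `qm set`. A minimal sketch, assuming the VM ID is 100 and the bridge is `vmbr0` (both are placeholders); the helper `virtio_nic_cmd` is hypothetical and only prints the command so it can be reviewed before running it.

```shell
# virtio_nic_cmd VMID BRIDGE
# Prints the qm command that sets net0 to a VirtIO NIC on the given bridge.
virtio_nic_cmd() {
    vmid=$1
    bridge=$2
    echo "qm set $vmid --net0 virtio,bridge=$bridge"
}

virtio_nic_cmd 100 vmbr0
# prints: qm set 100 --net0 virtio,bridge=vmbr0
```

Note that setting net0 this way without a MAC address makes Proxmox generate a new one, so any DHCP reservation tied to the old MAC will need updating.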

Windows Guests

Once again, the same issue as the Linux guests: the NICs need re-assigning.

But a new issue I found was that, following the migration to Proxmox, I couldn't get Windows Server to boot.

When attempting to start, Windows would simply hit a BugCheck with the exception:

'Inaccessible boot device'

Thankfully, some other people on the forums had the same issue.

In this example, the VM number (VMID) of the imported VM is written as x; yours will differ.

qm set x --bios ovmf

Add an extra disk on the VirtIO SCSI controller and change the boot disk's controller to SATA:

sed -i 's/scsi/sata/g' /etc/pve/qemu-server/x.conf

Set the boot device under Options to the SATA disk.

Boot, install the VirtIO drivers, then shut down.

Change the boot disk's controller (and that of any other disks) to VirtIO SCSI:

sed -i 's/sata/scsi/g' /etc/pve/qemu-server/x.conf

Change the boot device under Options again, and boot.
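One thing to be aware of: the two sed commands above replace every occurrence of the string in the config, which also touches lines such as `scsihw: virtio-scsi-pci` and any extra SCSI disk. A more targeted variant is sketched below. It is an assumption, not the forum's method: the helper `move_disk` is hypothetical, it assumes the imported boot disk is `scsi0`, and it prints the rewritten config to stdout so you can review it before replacing `/etc/pve/qemu-server/x.conf`.

```shell
# move_disk CONF FROM TO      e.g. move_disk x.conf scsi0 sata0
# Renames one disk entry and its reference in the "boot: order=" line,
# leaving other disks and settings such as scsihw untouched.
move_disk() {
    conf=$1
    from=$2
    to=$3
    sed -E \
        -e "s/^${from}:/${to}:/" \
        -e "/^boot:/ s/(=|;)${from}(;|\$)/\\1${to}\\2/" \
        "$conf"
}

# Usage on the Proxmox host (x is your VM number, as above):
# move_disk /etc/pve/qemu-server/x.conf scsi0 sata0    # before installing the drivers
# move_disk /etc/pve/qemu-server/x.conf sata0 scsi0    # after installing the drivers
```

Because the boot order line is rewritten too, it is still worth checking the boot device under Options before starting the VM.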

Why?

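This issue is due to the VirtIO drivers not being installed in Windows after the import, so at boot it cannot find the disk holding the Windows partition. Booting from SATA with an extra VirtIO SCSI disk attached lets you install the driver first.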

The Ending

So finally we arrive at the last few.

By this time most of the important items have been migrated off the cluster and the 2 hosts are running Proxmox.

I was also retiring an old SAN and migrating to Ceph, so before this final host was shut down I needed to make sure that ALL my required VMs were fully migrated off.
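A simple way to be sure nothing is left behind is to compare a list of VM names from ESXi with a list from Proxmox. A minimal sketch, assuming you have exported each list to a text file with one VM name per line (how you produce the files is up to you; on the Proxmox side `qm list` shows the VMs on a node). The helper name `not_yet_migrated` and the file names are hypothetical.

```shell
# not_yet_migrated ESXI_LIST PVE_LIST
# Prints the names that appear in the ESXi list but not in the Proxmox list.
not_yet_migrated() {
    esxi_sorted=$(mktemp)
    pve_sorted=$(mktemp)
    # comm needs sorted input; -u also drops duplicate names.
    sort -u "$1" > "$esxi_sorted"
    sort -u "$2" > "$pve_sorted"
    # -23 hides lines unique to the second file and lines common to both.
    comm -23 "$esxi_sorted" "$pve_sorted"
    rm -f "$esxi_sorted" "$pve_sorted"
}

# Usage (placeholder file names):
# not_yet_migrated esxi-vms.txt proxmox-vms.txt
```

An empty result means every name on the ESXi list also exists on Proxmox; it does not prove the VMs boot, so keep the post-migration tests.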

That's it. Once you are happy and the post-migration tests have passed, it's up to you when to shut down ESX.